What's Really Happening With DeepSeek AI

This example showcases advanced Rust features such as trait-based generic programming, error handling, and higher-order functions, making it a robust and versatile implementation for calculating factorials in different numeric contexts. 1. Error Handling: The factorial calculation may fail if the input string cannot be parsed into an integer. Therefore, the function returns a Result. 2. Main Function: Demonstrates how to use the factorial function with both u64 and i32 types by parsing strings to integers. Factorial Function: The factorial function is generic over any type that implements the Numeric trait. This approach allows the function to be used with both signed (i32) and unsigned (u64) integers.

Another function takes a mutable reference to a vector of integers, and an integer specifying the batch size. If an attempt is made to insert a duplicate word, the function returns without inserting anything. It then checks whether the end of the word was found and returns this information.
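The generic factorial described above can be sketched roughly as follows. The original code is not shown in this post, so everything here is an assumption made for illustration: the definition of the `Numeric` trait (only its name comes from the text), the helper name `factorial_from_str`, the use of `String` as the error type, and the overflow check.

```rust
use std::fmt::Debug;
use std::ops::Sub;
use std::str::FromStr;

/// Hypothetical `Numeric` trait; the post names it but never defines it.
trait Numeric: Copy + PartialOrd + Sub<Output = Self> {
    fn zero() -> Self;
    fn one() -> Self;
    /// Multiplication that reports overflow instead of panicking.
    fn mul_checked(self, rhs: Self) -> Option<Self>;
}

impl Numeric for u64 {
    fn zero() -> Self { 0 }
    fn one() -> Self { 1 }
    fn mul_checked(self, rhs: Self) -> Option<Self> { self.checked_mul(rhs) }
}

impl Numeric for i32 {
    fn zero() -> Self { 0 }
    fn one() -> Self { 1 }
    fn mul_checked(self, rhs: Self) -> Option<Self> { self.checked_mul(rhs) }
}

/// Factorial that is generic over any type implementing `Numeric`.
/// Returns an error for negative input or when the result overflows.
fn factorial<T: Numeric>(n: T) -> Result<T, String> {
    if n < T::zero() {
        return Err("factorial is undefined for negative input".to_string());
    }
    let mut acc = T::one();
    let mut i = n;
    while i > T::one() {
        acc = acc
            .mul_checked(i)
            .ok_or_else(|| "factorial overflowed the numeric type".to_string())?;
        i = i - T::one();
    }
    Ok(acc)
}

/// Parses a string into `T`, then computes its factorial.
/// `map_err` and `and_then` are the higher-order functions in play here.
fn factorial_from_str<T>(input: &str) -> Result<T, String>
where
    T: Numeric + FromStr,
    <T as FromStr>::Err: Debug,
{
    input
        .trim()
        .parse::<T>()
        .map_err(|e| format!("cannot parse {:?} as an integer: {:?}", input, e))
        .and_then(factorial::<T>)
}
```

With this sketch, `factorial_from_str::<u64>("5")` yields `Ok(120)`, while `factorial_from_str::<i32>("13")` returns an error because 13! does not fit in an `i32`. Returning a `Result` keeps both the parse failure and the overflow visible to the caller instead of panicking.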

This function uses pattern matching to handle the base cases (when n is either 0 or 1) and the recursive case, where it calls itself twice with decreasing arguments. The choice of gating function is often softmax. I certainly expect a Llama 4 MoE model within the next few months and am even more excited to watch this story of open models unfold. One of the "failures" of OpenAI’s Orion was that it needed so much compute that it took over 3 months to train. Both took the same time to answer, a fairly lengthy 10-15 seconds, as a detailed description of their methodologies spilled onto the screen. Both models generated responses at almost the same pace, making them equally reliable when it comes to fast turnaround. This brings us back to the same debate: what is actually open-source AI? While tech analysts broadly agree that DeepSeek-R1 performs at a similar level to ChatGPT, and even better for certain tasks, the field is moving fast. The model notably excels at coding and reasoning tasks while using significantly fewer resources than comparable models.
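The pattern-matching function mentioned at the start of the preceding paragraph is not named in the text. Base cases at 0 and 1 plus two recursive calls with decreasing arguments match the classic recursive Fibonacci definition, so the sketch below assumes that is what was meant. The name `fibonacci` and the integer types are illustrative choices, not taken from the original.

```rust
/// Naive recursive Fibonacci, assumed to be the function the text describes.
/// `match` handles the two base cases and the recursive case.
fn fibonacci(n: u32) -> u64 {
    match n {
        0 => 0,
        1 => 1,
        // Two recursive calls with decreasing arguments.
        _ => fibonacci(n - 1) + fibonacci(n - 2),
    }
}
```

For example, `fibonacci(10)` evaluates to 55. This form recomputes the same subproblems many times and so runs in exponential time. It is fine for illustrating pattern matching, but an iterative or memoized version would be preferable for large `n`.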

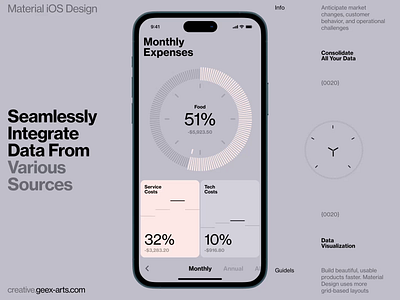

DeepSeek-V2.5 is optimized for several tasks, including writing, instruction-following, and advanced coding. CodeGemma is a collection of compact models specialized in coding tasks, from code completion and generation to understanding natural language, solving math problems, and following instructions. For now, the costs are far higher, as they involve a combination of extending open-source tools like the OLMo code and poaching expensive employees who can re-solve problems at the frontier of AI. This is far less than Meta, but it is still one of the organizations in the world with the most access to compute. The cost of progress in AI is much closer to this, at least until substantial improvements are made to the open versions of infrastructure (code and data). Comprehensive Code Search: Searches through your entire codebase to find exactly what you need. Wired provides a comprehensive overview of the latest advancements, applications, challenges, and societal implications in the field of artificial intelligence. While there is a lot of uncertainty around some of DeepSeek’s assertions, its latest model’s performance rivals that of ChatGPT, and yet it appears to have been developed for a fraction of the cost.

Please pull the latest version and try it out. One would assume this model would perform better, but it did much worse… The authors also made an instruction-tuned one that does somewhat better on a few evals. We ran multiple large language models (LLMs) locally to figure out which one is the best at Rust programming. Multiple estimates put DeepSeek in the 20K (on ChinaTalk) to 50K (Dylan Patel) A100 equivalent of GPUs. If DeepSeek could, they’d happily train on more GPUs concurrently. Read more on MLA here. As the site handles the mounting interest and users start to join from the waitlist, keep it here as we dive into everything about this mysterious chatbot. The success here is that they’re relevant among American technology companies spending what is approaching or surpassing $10B per year on AI models. DeepSeek also says its model uses 10 to 40 times less energy than comparable US AI technology. China’s domestic semiconductor industry in global markets. China’s leadership has concluded that possessing commercially competitive industries is generally of greater long-term benefit to China’s national security sector than short-term military utilization of any stolen technology. What impact has DeepSeek had on the AI industry?
